Introduction to Computer Organization

Computer Architecture

To understand digital signal processing systems, we must understand a little about how computers compute. The modern definition of a computer is an electronic device that performs calculations on data, presenting the results to humans or other computers in a variety of (hopefully useful) ways.

Organization of a Simple Computer

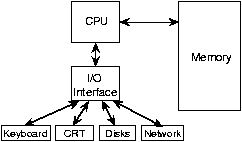

The generic computer contains input devices (keyboard, mouse, A/D (analog-to-digital) converter, etc.), a computational unit, and output devices (monitors, printers, D/A converters). The computational unit is the computer's heart, and usually consists of a central processing unit (CPU), a memory, and an input/output (I/O) interface. The I/O devices present on a given computer vary greatly.

- A simple computer operates fundamentally in discrete time. Computers are clocked devices, in which computational steps occur periodically according to ticks of a clock. This description underlies the notion of clock speed: when you say "I have a 1 GHz computer," you mean that your computer takes 1 nanosecond to perform each step. That is incredibly fast! A "step" does not, unfortunately, necessarily mean a computation like an addition; computers break such computations down into several stages, which means that the clock speed need not express the computational speed. Computational speed is expressed in units of millions of instructions per second (Mips). Your 1 GHz computer (clock speed) may have a computational speed of 200 Mips.

- Computers perform integer (discrete-valued) computations. Computer calculations can be numeric (obeying the laws of arithmetic), logical (obeying the laws of an algebra), or symbolic (obeying any law you like).1 Each computer instruction that performs an elementary numeric calculation (an addition, a multiplication, or a division) does so only for integers. The sum or product of two integers is also an integer, but the quotient of two integers is often not an integer. How does a computer deal with numbers that have digits to the right of the decimal point? This problem is addressed by using the so-called floating-point representation of real numbers. At its heart, however, this representation relies on integer-valued computations.

Representing Numbers

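Focusing on numbers, all numbers can be represented by the positional notation system.2 The $b$-ary positional representation system uses the position of digits ranging from $0$ to $b-1$ to denote a number. The quantity $b$ is known as the base of the number system. Mathematically, positional systems represent the positive integer $n$ as

$$n = \sum_{k=0}^{\infty} d_k b^k, \quad d_k \in \{0, \ldots, b-1\}$$

and we succinctly express $n$ in base-$b$ as $n_b = d_N d_{N-1} \ldots d_0$. The number 25 in base 10 equals $2 \times 10^1 + 5 \times 10^0$, so that the digits representing this number are $d_0 = 5$, $d_1 = 2$, and all other $d_k$ equal zero. This same number in binary (base 2) equals 11001 ($1 \times 2^4 + 1 \times 2^3 + 0 \times 2^2 + 0 \times 2^1 + 1 \times 2^0$) and 19 in hexadecimal (base 16), since $1 \times 16^1 + 9 \times 16^0 = 25$. Fractions between zero and one are represented the same way, using negative powers of the base:

$$f = \sum_{k=-\infty}^{-1} d_k b^k, \quad d_k \in \{0, \ldots, b-1\}$$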
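The digit-extraction idea behind the positional formula can be sketched in a few lines of Python. This is a minimal illustration, not code from the original text: the names `to_base` and `from_base` are hypothetical, and writing digits above 9 as the letters a through z is an assumed convention that limits the sketch to bases 2 through 36.

```python
SYMBOLS = "0123456789abcdefghijklmnopqrstuvwxyz"  # assumed digit symbols, bases 2..36


def to_base(n: int, b: int) -> str:
    """Digits d_N ... d_0 of a nonnegative integer n in base b."""
    if n < 0 or not 2 <= b <= 36:
        raise ValueError("need n >= 0 and 2 <= b <= 36")
    if n == 0:
        return "0"
    digits = []
    while n > 0:
        n, d = divmod(n, b)        # d is the next digit, starting from d_0
        digits.append(SYMBOLS[d])
    return "".join(reversed(digits))  # write the most significant digit first


def from_base(digits: str, b: int) -> int:
    """Evaluate n = sum_k d_k b^k for a digit string written d_N ... d_0."""
    n = 0
    for ch in digits.lower():
        d = SYMBOLS.index(ch)
        if d >= b:
            raise ValueError(f"digit {ch!r} is not valid in base {b}")
        n = n * b + d              # Horner's rule: same sum, fewer multiplications
    return n


print(to_base(25, 10), to_base(25, 2), to_base(25, 16))  # 25 11001 19
print(from_base("11001", 2), from_base("19", 16))        # 25 25
```

Repeated division by $b$ peels off $d_0$ first, which is why the digits are reversed before being returned.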

All numbers can be represented by their sign, integer and fractional parts. Complex numbers can be thought of as two real numbers that obey special rules to manipulate them.

Humans use base 10, commonly assumed to be due to us having ten fingers. Digital computers use the base 2 or binary number representation, each digit of which is known as a bit (binary digit).

Number representations on computers

Here, each bit is represented as a voltage that is either "high" or "low," thereby representing "1" or "0," respectively. To represent signed values, we tack on a special bit, the sign bit, to express the sign. The computer's memory consists of an ordered sequence of bytes, a collection of eight bits. A byte can therefore represent an unsigned number ranging from $0$ to $2^8 - 1 = 255$. If we take one of the bits and make it the sign bit, we can make the same byte represent numbers ranging from $-128$ to $127$ (this is the range under the two's-complement convention most computers use; a plain sign-and-magnitude format reaches only $-127$). But a computer cannot represent all possible real numbers. The fault is not with the binary number system; rather, having only a finite number of bytes is the problem. While a gigabyte of memory may seem to be a lot, it takes an infinite number of bits to represent $\pi$. Since we want to store many numbers in a computer's memory, we are restricted to those that have a finite binary representation. Large integers can be represented by an ordered sequence of bytes. Common lengths, usually expressed in terms of the number of bits, are 16, 32, and 64. Thus, an unsigned 32-bit number can represent integers ranging between 0 and $2^{32} - 1$ (4,294,967,295), a number almost big enough to enumerate every human in the world!3
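A short Python sketch of these ranges follows. It assumes the two's-complement convention for signed values, and the helper `int_range` is hypothetical, not from the original text. Python's built-in `int.to_bytes` then shows a 32-bit quantity laid out as an ordered sequence of four bytes.

```python
def int_range(bits: int, signed: bool = False) -> tuple[int, int]:
    """Smallest and largest integers that fit in `bits` bits.

    Signed ranges assume two's complement (an assumption about the
    machine; sign-and-magnitude would lose the most negative value).
    """
    if signed:
        return -(2 ** (bits - 1)), 2 ** (bits - 1) - 1
    return 0, 2 ** bits - 1


print(int_range(8))               # (0, 255)
print(int_range(8, signed=True))  # (-128, 127)
print(int_range(32))              # (0, 4294967295)

# An integer stored as an ordered sequence of 4 bytes, most significant first.
print((25).to_bytes(4, "big").hex())  # 00000019
```

The byte order ("big", most significant byte first) is chosen here only for readability; real machines differ on this point.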

Exercise

For both 32-bit and 64-bit integer representations, what are the largest numbers that can be represented if a sign bit must also be included?

For $b$-bit signed integers, the largest number is $2^{b-1} - 1$. For $b = 32$, we have 2,147,483,647 and for $b = 64$, we have 9,223,372,036,854,775,807 or about $9.2 \times 10^{18}$.

While this system represents integers well, how about numbers having nonzero digits to the right of the decimal point? In other words, how are numbers that have fractional parts represented? For such numbers, the binary representation system is used, but with a little more complexity. The floating-point system uses a number of bytes, typically 4 or 8, to represent the number, but with one byte (sometimes two bytes) reserved to represent the exponent $e$ of a power-of-two multiplier for the number; the remaining bytes express the mantissa $m$:

$$x = m \, 2^e$$

The mantissa is usually taken to be a binary fraction having a magnitude in the range $\left[\frac{1}{2}, 1\right)$, which means that the binary representation is such that $d_{-1} = 1$.4 The number zero is an exception to this rule, and it is the only floating point number having a zero fraction. The sign of the mantissa represents the sign of the number and the exponent can be a signed integer.
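Python's standard `math.frexp` happens to use this same normalization: it returns a pair `(m, e)` with $x = m \, 2^e$ and $1/2 \le |m| < 1$, or `(0.0, 0)` for zero, and `math.ldexp` reverses it. The loop below is a small illustration; the sample values are arbitrary choices.

```python
import math

for x in [2.5, -2.5, 0.1, 1024.0, 0.0]:
    m, e = math.frexp(x)          # x == m * 2**e, with 0.5 <= |m| < 1 unless x == 0
    assert math.ldexp(m, e) == x  # ldexp(m, e) computes m * 2**e exactly
    print(f"{x} = {m} * 2**{e}")

print(math.frexp(2.5))  # (0.625, 2), matching 2.5 = 0.625 * 2**2
```

Note how zero comes back with a zero mantissa, the one exception to the normalization rule.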

A computer's representation of integers is either perfect or only approximate, the latter situation occurring when the integer exceeds the range of numbers that a limited set of bytes can represent. Floating point representations have similar representation problems: if the number $x$ can be multiplied/divided by enough powers of two to yield a fraction lying between 1/2 and 1 that has a finite binary-fraction representation, the number is represented exactly in floating point. Otherwise, we can only represent the number approximately, though not catastrophically in error as with integers. For example, the number 2.5 equals $0.625 \times 2^2$, the fractional part of which has an exact binary representation.5 However, the number 2.6 does not have an exact binary representation, and can only be represented approximately in floating point. In single precision floating point numbers, which require 32 bits (one byte for the exponent and the remaining 24 bits for the mantissa), the number 2.6 will be represented as 2.599999904632568359375. Note that this approximation has a much longer decimal expansion. This level of accuracy may not suffice in numerical calculations. Double precision floating point numbers consume 8 bytes, and quadruple precision 16 bytes. The more bits used in the mantissa, the greater the accuracy. This increasing accuracy means that more numbers can be represented exactly, but there are always some that cannot. Such inexact numbers have an infinite binary representation.6 Realizing that real numbers can only be represented approximately is quite important, and underlies the entire field of numerical analysis, which seeks to predict the numerical accuracy of any computation.
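The sketch below, in Python, expands a fraction one binary digit at a time to show which expansions terminate, then rounds 2.6 to 32-bit floating point with the standard `struct` module and prints the exact stored value using `decimal.Decimal`. Two assumptions: the helper `binary_fraction` is hypothetical, and `struct`'s `"f"` format is IEEE 754 single precision, whose bit layout (biased exponent, hidden leading one) differs in detail from the format described above.

```python
import struct
from decimal import Decimal
from fractions import Fraction


def binary_fraction(f: Fraction, max_bits: int = 24) -> str:
    """Binary digits d_{-1} d_{-2} ... of a fraction 0 <= f < 1.

    Returns as soon as the expansion terminates; a trailing "..." means
    digits remain after max_bits (hypothetical helper for illustration).
    """
    if not 0 <= f < 1:
        raise ValueError("f must satisfy 0 <= f < 1")
    bits = []
    for _ in range(max_bits):
        if f == 0:
            break
        f *= 2
        bit = int(f)          # digit that moved left of the binary point
        bits.append(str(bit))
        f -= bit              # keep only the remaining fractional part
    digits = "".join(bits) or "0"
    return "0." + digits + ("..." if f else "")


# 0.625 = 0.101 in binary: the mantissa of 2.5 = 0.625 * 2**2 terminates.
print(binary_fraction(Fraction(5, 8)))
# 2.6 = 2 + 3/5, and 3/5 = 0.1001 1001 ... in binary never terminates.
print(binary_fraction(Fraction(3, 5)))

# Round 2.6 to IEEE 754 single precision and print the stored value exactly.
stored = struct.unpack("<f", struct.pack("<f", 2.6))[0]
print(Decimal(stored))  # 2.599999904632568359375
```

Using `Fraction` keeps the digit expansion exact; doing the same loop with Python floats would inherit the very rounding error being illustrated.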

Exercise

What are the largest and smallest numbers that can be represented in 32-bit floating point? In 64-bit floating point that has sixteen bits allocated to the exponent? Note that both exponent and mantissa require a sign bit.

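In floating point, the number of bits in the exponent determines the largest and smallest representable numbers. For 32-bit floating point, the largest (smallest) numbers are about $2^{127} \approx 1.7 \times 10^{38}$ ($2^{-127} \approx 5.9 \times 10^{-39}$). For 64-bit floating point, the largest number is about $2^{32767} \approx 7 \times 10^{9863}$.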
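These figures can be checked with a little Python arithmetic. The sketch assumes, as the exercise does, that the exponent spends one of its bits on its sign, so a $k$-bit exponent reaches $\pm(2^{k-1}-1)$; it ignores the mantissa, which changes these bounds by at most a factor of two. The helper name `max_exponent` is hypothetical.

```python
import math


def max_exponent(exponent_bits: int) -> int:
    """Largest exponent magnitude when one of the exponent's bits is its
    sign bit (sign-and-magnitude exponent, as the exercise assumes)."""
    return 2 ** (exponent_bits - 1) - 1


e32 = max_exponent(8)   # one byte of exponent: 127
print(f"2**{e32} = {2.0 ** e32:.2e}, 2**-{e32} = {2.0 ** -e32:.2e}")

e64 = max_exponent(16)  # sixteen bits of exponent: 32767
# 2.0 ** 32767 would overflow a Python float (OverflowError), so use logarithms.
log10_max = e64 * math.log10(2)
print(f"2**{e64} is about 10**{log10_max:.2f}")
```

Working with $\log_{10} 2^{e} = e \log_{10} 2$ is the usual way to size numbers that exceed the host's own floating-point range.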

So long as the integers aren't too large, they can be represented exactly in a computer using the binary positional notation. Electronic circuits that make up the physical computer can add and subtract integers without error. (This statement isn't quite true; when does addition cause problems?)
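One answer to that question is overflow: a fixed-width adder keeps only as many bits as it has, and the carry out of the top position is lost. A minimal Python sketch of that behavior follows, assuming two's complement for signed results; `add_fixed_width` is a hypothetical illustration, not a real hardware interface.

```python
def add_fixed_width(a: int, b: int, bits: int = 8, signed: bool = False) -> int:
    """Add two integers the way a `bits`-wide adder would: keep only the
    low `bits` bits of the sum and discard the carry out of the top bit.

    Signed results are reinterpreted as two's complement (an assumption
    about the machine's signed format).
    """
    mask = (1 << bits) - 1
    raw = (a + b) & mask                # the carry out of the top bit is lost
    if signed and raw >> (bits - 1):    # top bit set: negative in two's complement
        return raw - (1 << bits)
    return raw


print(add_fixed_width(200, 100))                           # 44, not 300
print(add_fixed_width(2_147_483_647, 1, 32, signed=True))  # -2147483648
```

In both cases the true sum needs one more bit than the adder provides, which is exactly when addition "causes problems."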

Computer Arithmetic and Logic

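The binary addition and multiplication tables are

$$\begin{aligned} 0 + 0 &= 0 & \qquad 0 \times 0 &= 0 \\ 0 + 1 &= 1 & \qquad 0 \times 1 &= 0 \\ 1 + 1 &= 10 & \qquad 1 \times 1 &= 1 \\ 1 + 0 &= 1 & \qquad 1 \times 0 &= 0 \end{aligned}$$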

Note that if carries are ignored,7 subtraction of two single-digit binary numbers yields the same bit as addition. Computers use high and low voltage values to express a bit, and an array of such voltages expresses numbers akin to positional notation. Logic circuits perform arithmetic operations.

Exercise

Add twenty-five and seven in base 2. Note the carries that might occur. Why is the result "nice"?

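$25_{10} = 11001_2$ and $7_{10} = 111_2$. We find that $11001_2 + 111_2 = 100000_2 = 32_{10}$, a power of two: a carry occurs in every column, leaving a single 1 followed by zeros.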
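The column-by-column addition in this exercise can be sketched in Python. The helper `add_binary` is hypothetical; it works on strings of 0s and 1s and propagates the carry as in pencil-and-paper addition.

```python
def add_binary(x: str, y: str) -> str:
    """Add two binary digit strings column by column, propagating carries."""
    width = max(len(x), len(y))
    x, y = x.zfill(width), y.zfill(width)      # pad with leading zeros
    result, carry = [], 0
    for a, b in zip(reversed(x), reversed(y)):  # least significant column first
        s = int(a) + int(b) + carry
        result.append(str(s % 2))   # digit written in this column
        carry = s // 2              # carry into the next column
    if carry:
        result.append("1")          # final carry becomes a new leading digit
    return "".join(reversed(result))


print(add_binary("11001", "111"))  # 100000  (25 + 7 = 32)
```

Each column's sum is at most $1 + 1 + 1 = 3$, so the carry into the next column is always 0 or 1.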

The variables of logic indicate truth or falsehood. $A \cap B$, the AND of $A$ and $B$, represents a statement that both $A$ and $B$ must be true for the statement to be true. You use this kind of statement to tell search engines that you want to restrict hits to cases where both of the events $A$ and $B$ occur. $A \cup B$, the OR of $A$ and $B$, yields a value of truth if either is true. Note that if we represent truth by a "1" and falsehood by a "0," binary multiplication corresponds to AND and addition (ignoring carries) to XOR. XOR, the exclusive or operator, is true when exactly one of $A$ and $B$ is true; it equals the intersection of $A \cup B$ and $\overline{A \cap B}$. The English mathematician George Boole discovered this equivalence in the mid-nineteenth century. It laid the foundation for what we now call Boolean algebra, which expresses logical statements as equations. More importantly, any computer using base-2 representations and arithmetic can also easily evaluate logical statements. This fact makes an integer-based computational device much more powerful than might be apparent.
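Because each variable takes only two values, these correspondences can be checked exhaustively. This Python sketch (the function `truth_table` is hypothetical) asserts, for every combination of $A$ and $B$ with truth as 1 and falsehood as 0, that single-bit multiplication equals AND, single-bit addition with the carry discarded equals XOR, and XOR equals OR combined with NOT AND.

```python
from itertools import product


def truth_table() -> list[tuple[int, int, int, int, int]]:
    """Rows (A, B, A AND B, A XOR B, A OR B) with truth = 1, falsehood = 0."""
    rows = []
    for a, b in product((0, 1), repeat=2):
        # Single-bit multiplication agrees with logical AND.
        assert a * b == (a & b)
        # Single-bit addition with the carry discarded agrees with XOR.
        assert (a + b) % 2 == (a ^ b)
        # XOR holds when A OR B is true but A AND B is not.
        assert (a ^ b) == ((a | b) & (1 - (a & b)))
        rows.append((a, b, a * b, (a + b) % 2, a | b))
    return rows


print("A B | AND XOR OR")
for a, b, and_, xor, or_ in truth_table():
    print(f"{a} {b} |  {and_}   {xor}   {or_}")
```

Here `1 - x` plays the role of NOT for a single bit, again using nothing but integer arithmetic.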

Footnotes

- 1

An example of a symbolic computation is sorting a list of names.

- 2

Alternative number representation systems exist. For example, we could use stick figure counting or Roman numerals. These were useful in ancient times, but very limiting when it comes to arithmetic calculations: ever tried to divide two Roman numerals?

- 3

You need one more bit to do that.

- 4

In some computers, this normalization is taken to an extreme: the leading binary digit is not explicitly expressed, providing an extra bit to represent the mantissa a little more accurately. This convention is known as the hidden-ones notation.

- 5

See if you can find this representation.

- 6

Note that there will always be numbers that have an infinite representation in any chosen positional system. The choice of base defines which do and which don't. If you were thinking that base 10 numbers would solve this inaccuracy, note that $1/3 = 0.333\ldots$ has an infinite representation in decimal (and binary for that matter), but has a finite representation in base 3 ($0.1_3$).

- 7

A carry means that a computation performed at a given position affects other positions as well. Here, $1 + 1 = 10$ is an example of a computation that involves a carry.

This textbook is open source. Download for free at http://cnx.org/contents/778e36af-4c21-4ef7-9c02-dae860eb7d14@9.72.
